Autonomous Weapons & Human Control

10 May 2016

By Paul Scharre and Kelley Sayler for the Center for a New American Security (CNAS)

This brief was originally published by the Center for a New American Security (CNAS) on 7 April 2016.

Human judgment and control in lethal attacks

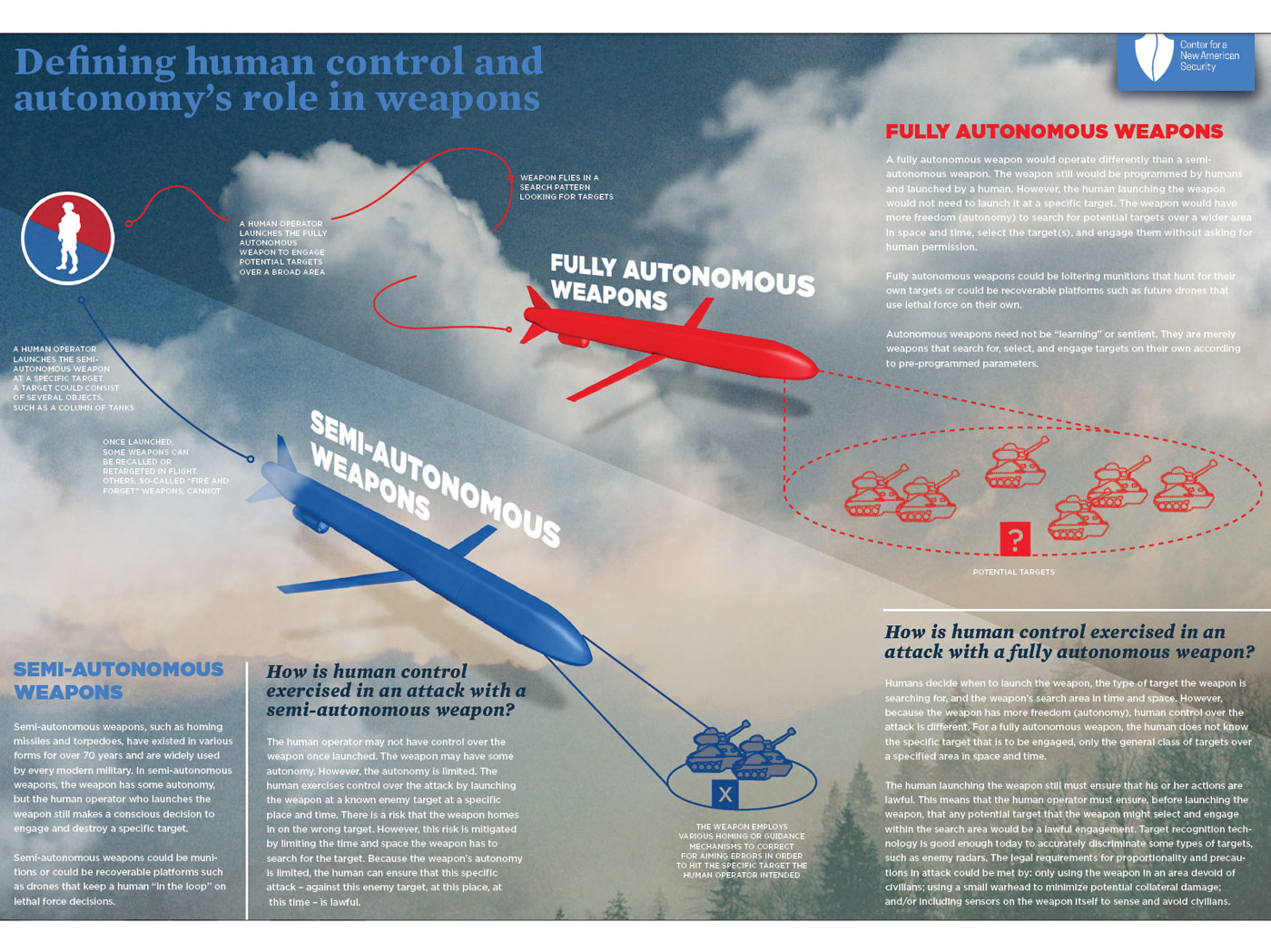

Increasing autonomy in weapons raises the question of how much human involvement is required in lethal attacks. Some have called for “meaningful human control” over attacks. Others have suggested a standard of “appropriate human judgment.” Regardless of the terms used, many agree that humans should be involved in lethal force decisions at some level.

Automation and autonomy are already used for a wide range of functions in weapons, including searching for and identifying potential targets, tracking targets, cueing them to human operators, timing when to fire, and homing in on targets once launched. Generally, humans decide which specific targets are to be engaged.

Humans currently perform three kinds of roles with respect to target selection and engagement, sometimes performing multiple roles simultaneously.

- The human as essential operator: The weapon system cannot accurately and effectively complete engagements without the human operator.

- The human as moral agent: The human operator makes value-based judgments about whether the use of force is appropriate—for example, whether the military necessity of destroying a particular target in a particular situation outweighs the potential collateral damage.

- The human as fail-safe: The human operator has the ability to intervene and alter or halt the weapon system’s operation if the weapon begins to fail or if circumstances change such that the engagement is no longer appropriate.

As machine intelligence capabilities increase, humans may not be needed as essential operators of future weapon systems. However, humans still may be needed to make value-based moral decisions and/or act as fail-safes in the event that the weapon system fails.

Rather than focusing on where humans are needed today based on existing technology, we ought to ask: If technology were sufficiently advanced to make lethal force decisions in a lawful manner, are there tasks for which human judgment still would be required because they involve decisions that only humans should make? Do some decisions require uniquely human judgment? If so, why?

Defensive Human-Supervised Autonomous Weapons

At least 30 nations currently have defensive human-supervised autonomous weapons to defend against short-warning saturation attacks from incoming missiles and rockets. These weapons are needed in situations where the speed of engagements can overwhelm human operators.

Once activated and placed into an autonomous mode, these weapon systems can search for, select, and engage incoming threats on their own without further human intervention. Humans supervise the weapon's operation and can intervene to halt engagements if necessary. To date, human-supervised autonomous weapons have been used in limited circumstances to defend human-occupied vehicles or bases. Because humans are co-located with the system and have physical access to it, in principle they could manually disable the system if it begins to malfunction.

Why might militaries build autonomous weapons?

A frequent argument for autonomous weapons is that they may behave more lawfully than human soldiers on the battlefield, reducing civilian casualties. It is true that machine object recognition is improving rapidly and may soon surpass humans' ability to identify objects accurately. Automated target identification tools may help to discriminate more accurately between military and civilian objects on the battlefield, reducing civilian casualties. Adding automation does not necessarily equate to removing human involvement, however. Militaries could design weapons with more advanced automatic target identification and still retain a human in the loop to exercise human judgment over each engagement.

Autonomous weapons may be desired because of their advantages in speed. Without a human in the loop, machines potentially could engage enemy targets faster. At least 30 nations already use human-supervised autonomous weapons to defend human-occupied vehicles and bases for this reason.

Militaries also could desire autonomous weapons so that uninhabited (“unmanned”) vehicles can select and engage targets even if they are out of communications with human controllers. A number of major military powers are building combat drones to operate in contested areas where communications with human controllers may be jammed.

Does automation increase or decrease human control?

Automation changes the nature of human control over a task or a process by delegating the performance of that task to a machine. In situations where the machine can perform the task with greater reliability or precision than a person, this can actually increase human control over the final outcome. For example, with a household thermostat, humans delegate the task of turning the heat and air conditioning on and off, and in doing so they improve their control over the outcome: the temperature in the home.

Some tasks lend themselves more easily to automation. Tasks that have an objectively correct or optimal outcome and that occur in controlled or predictable situations may be suitable for automation. Tasks that lack a clear “right” answer, that depend on context, or that occur in uncontrolled and unpredictable environments may not be as suitable for automation.

Some engagement-related tasks in war have a “right” answer. Object identification is a potentially fruitful area for automation. Recent advances in machine intelligence have shown tremendous promise in object recognition.

Some engagement-related tasks may not have an objectively right answer and may depend on context or value-based decisions. Whether a person is a combatant may depend heavily on context and their specific actions. Proportionality considerations may similarly depend on ethical and moral judgment.

Image: Defining human control and autonomy's role in weapons